H2C(Unencrypted HTTP/2) Migration Guide in an Internal Environment¶

This post records what I found while migrating HTTP/1.1 services to HTTP/2 over h2c, and lists the problems I ran into along the way. The background is that using HTTP/2 for internal communication improves efficiency considerably. For that purpose h2c (unencrypted HTTP/2) is the better fit, because it avoids the encryption and decryption work between peers.

Why H2C¶

For internal communication between a host-level proxy instance and the services inside containers, HTTP/2 is a big improvement over traditional HTTP/1.1 thanks to multiplexing.

Besides, inside an internal cluster the security guarantees of TLS are somewhat redundant, since we usually treat the internal network as trusted. Hence h2, which requires TLS, is not an ideal fit for internal use, and we pick h2c for internal services.

HTTP/2 Protocol Negotiation¶

HTTP/2 has two kinds of protocol negotiation, h2 and h2c:

- h2: encrypted HTTP/2, negotiated over TLS via ALPN (Application-Layer Protocol Negotiation)

- h2c: prior knowledge (rfc7540#3.4) or the upgrading mechanism (rfc7230#6.7)

ALPN happens during the TLS handshake and is not the topic of this post.

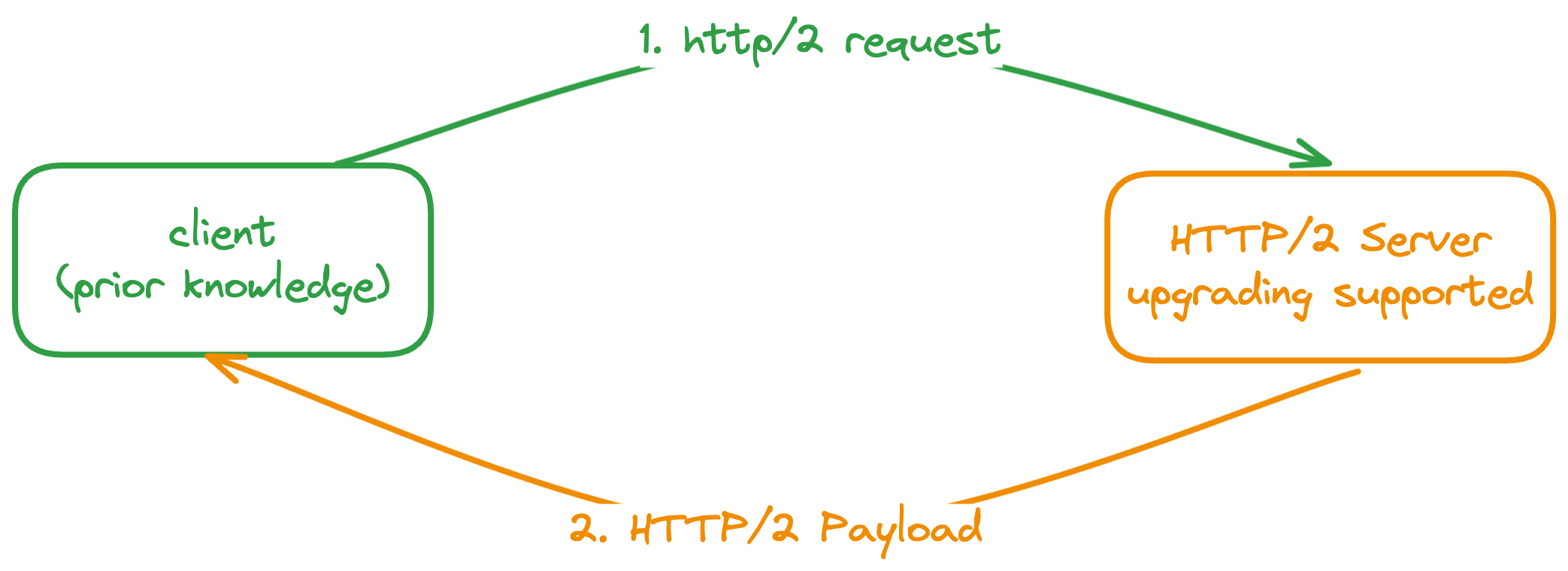

Prior Knowledge¶

The client (proxy) sends an HTTP/2 request directly if it already knows that the server supports it. That is what prior knowledge refers to. But how can the client know this?

In an internal environment, service routing goes through a service registration and discovery mechanism, which makes h2c prior knowledge possible. When a proxy forwards a request to its destination, it can query the service registry to see whether the peer supports HTTP/2; if so, it sends the request as HTTP/2 over h2c.

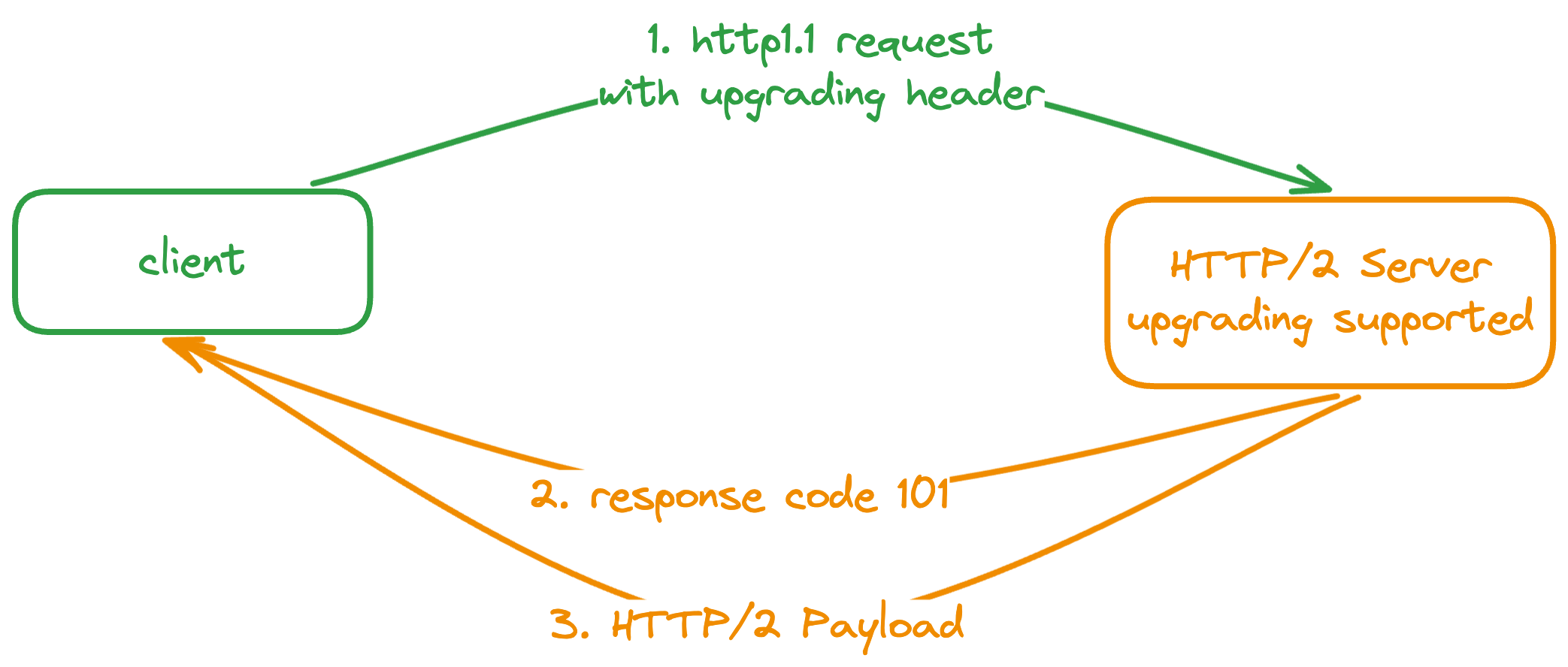
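The registry lookup described above can be sketched as follows. This is only an illustration: the `registry` type, the service names, and the protocol labels are hypothetical and do not come from any real service-discovery system.

```go
package main

import "fmt"

// Protocol labels used by this sketch only.
const (
	protoHTTP1 = "http/1.1"
	protoH2C   = "h2c"
)

// registry is a hypothetical stand-in for a service registry: it maps a
// service name to whether that service has declared h2c support.
type registry map[string]bool

// pickProtocol returns h2c only when the registry positively states that
// the peer supports it. Unknown services fall back to HTTP/1.1.
func (r registry) pickProtocol(service string) string {
	if r[service] {
		return protoH2C
	}
	return protoHTTP1
}

func main() {
	reg := registry{"orders": true, "legacy-billing": false}
	for _, svc := range []string{"orders", "legacy-billing", "unknown"} {
		fmt.Printf("%s -> %s\n", svc, reg.pickProtocol(svc))
	}
}
```

Falling back to HTTP/1.1 for unknown services keeps the proxy safe when the registry entry is missing or stale.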

Upgrading Mechanism¶

The client sends an HTTP/1.1 request with an upgrade header such as Upgrade: h2c to a server that may support HTTP/2.

If the server supports HTTP/2, it likely supports upgrading from HTTP/1.1 to HTTP/2 as well. To upgrade, it responds with 101 (Switching Protocols) and then continues with HTTP/2 frames.

Note that an HTTP/2 server doesn't always support this feature. As rfc7230 section 6.7 reads:

A server MAY ignore a received Upgrade header field if it wishes to continue using the current protocol on that connection.

Upgrading sounds great, but it causes a lot of trouble during implementation and integration. I will describe the problems later.
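To make the upgrade request concrete, here is a minimal sketch of how a server or proxy might recognize one. Per RFC 7540 Section 3.2 the request carries `Upgrade: h2c`, an `HTTP2-Settings` header, and both tokens in `Connection`. The function names are illustrative, and the settings value in `main` is just a placeholder string.

```go
package main

import (
	"fmt"
	"net/http"
	"strings"
)

// hasToken reports whether any of the comma-separated header values
// contains token, compared case-insensitively.
func hasToken(values []string, token string) bool {
	for _, v := range values {
		for _, part := range strings.Split(v, ",") {
			if strings.EqualFold(strings.TrimSpace(part), token) {
				return true
			}
		}
	}
	return false
}

// isH2CUpgrade reports whether h looks like an h2c upgrade request.
func isH2CUpgrade(h http.Header) bool {
	return hasToken(h.Values("Upgrade"), "h2c") &&
		hasToken(h.Values("Connection"), "Upgrade") &&
		hasToken(h.Values("Connection"), "HTTP2-Settings") &&
		h.Get("HTTP2-Settings") != ""
}

// checkHeaders builds a header set from plain strings so that the check
// can be exercised without a real connection.
func checkHeaders(upgrade, connection, settings string) bool {
	h := http.Header{}
	h.Set("Upgrade", upgrade)
	h.Set("Connection", connection)
	if settings != "" {
		h.Set("HTTP2-Settings", settings)
	}
	return isH2CUpgrade(h)
}

func main() {
	fmt.Println(checkHeaders("h2c", "Upgrade, HTTP2-Settings", "placeholder-settings"))
	fmt.Println(checkHeaders("websocket", "Upgrade", ""))
}
```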

Integrating H2C in Go¶

Go provides the golang.org/x/net/http2/h2c package to support h2c. It supports both prior knowledge and the upgrading mechanism during protocol negotiation. If neither applies, HTTP/1.1 is used as a fallback. Usage is simple: wrap your HTTP handler together with an HTTP/2 server, and the library takes care of the protocol negotiation.

Note that the HTTP/2 and HTTP/1.1 servers listen on the same port, and the protocol is chosen according to the request the client sends.
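One way to see how a single port can serve both protocols: a prior-knowledge client always opens with the fixed 24-byte HTTP/2 connection preface (RFC 7540 Section 3.5), which can be told apart from an HTTP/1.1 request line. The sketch below peeks at the first bytes to decide. It illustrates the idea only and is not the h2c package's actual code; `startsWithPreface` and `sniff` are hypothetical names.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// clientPreface is the byte sequence every HTTP/2 client sends first.
const clientPreface = "PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n"

// startsWithPreface peeks at the buffered bytes without consuming them,
// so the real protocol handler can still read the full stream afterwards.
func startsWithPreface(br *bufio.Reader) bool {
	b, err := br.Peek(len(clientPreface))
	return err == nil && string(b) == clientPreface
}

// sniff classifies the raw opening bytes of a connection.
func sniff(raw string) string {
	if startsWithPreface(bufio.NewReader(strings.NewReader(raw))) {
		return "h2c (prior knowledge)"
	}
	return "HTTP/1.1 (possibly with Upgrade)"
}

func main() {
	fmt.Println(sniff(clientPreface))
	fmt.Println(sniff("GET / HTTP/1.1\r\nHost: example.internal\r\n\r\n"))
}
```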

Currently the h2c library supports both prior knowledge and the upgrading mechanism. For our internal usage the upgrading feature is useless, and it brings potential problems such as HTTP/2 smuggling. I raised a PR to the h2c package.

Upgrading Mechanism Was Deprecated¶

The upgrading mechanism has been deprecated since rfc9113, which reads:

The "h2c" string was previously used as a token for use in the HTTP Upgrade mechanism's Upgrade header field (Section 7.8 of [HTTP]). This usage was never widely deployed and is deprecated by this document. The same applies to the HTTP2-Settings header field, which was used with the upgrade to "h2c".

The RFC deprecated it because the "usage was never widely deployed". Based on this, the Go team decided to support h2c in the standard library with prior knowledge only.
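As a rough sketch of what prior-knowledge h2c looks like with the standard library alone, the example below assumes Go 1.24 or later, where net/http has the `Protocols` type and the `Server.Protocols` and `Transport.Protocols` fields. The helper name `roundTripProto` and the loopback setup are purely illustrative.

```go
package main

import (
	"fmt"
	"io"
	"net"
	"net/http"
	"time"
)

// roundTripProto starts a throwaway server on a loopback port that accepts
// HTTP/1.1 and unencrypted HTTP/2, calls it with a prior-knowledge client,
// and returns the protocol version the server saw.
func roundTripProto() (string, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", err
	}

	var serverProtos http.Protocols
	serverProtos.SetHTTP1(true)
	serverProtos.SetUnencryptedHTTP2(true)
	srv := &http.Server{
		Protocols: &serverProtos,
		Handler: http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
			fmt.Fprint(w, r.Proto) // echo the negotiated protocol
		}),
	}
	go srv.Serve(ln)
	defer srv.Close()

	// Enabling only unencrypted HTTP/2 makes the transport use h2c with
	// prior knowledge for http:// URLs.
	var clientProtos http.Protocols
	clientProtos.SetUnencryptedHTTP2(true)
	tr := &http.Transport{Protocols: &clientProtos}
	defer tr.CloseIdleConnections()
	client := &http.Client{Transport: tr, Timeout: 5 * time.Second}

	resp, err := client.Get("http://" + ln.Addr().String() + "/")
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	proto, err := roundTripProto()
	fmt.Println(proto, err)
}
```

With this setup the handler should report HTTP/2.0 even though no TLS is involved. An HTTP/1.1 client hitting the same port would still be served, because the server enables both protocols.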

Problems in H2C Upgrading Mechanism¶

The main problems of h2c are concentrated in the upgrading feature, on both the integration side and the implementation side. I will introduce them one by one. The implementation difficulties are summarized from GitHub issues.

HTTP/2 Smuggle¶

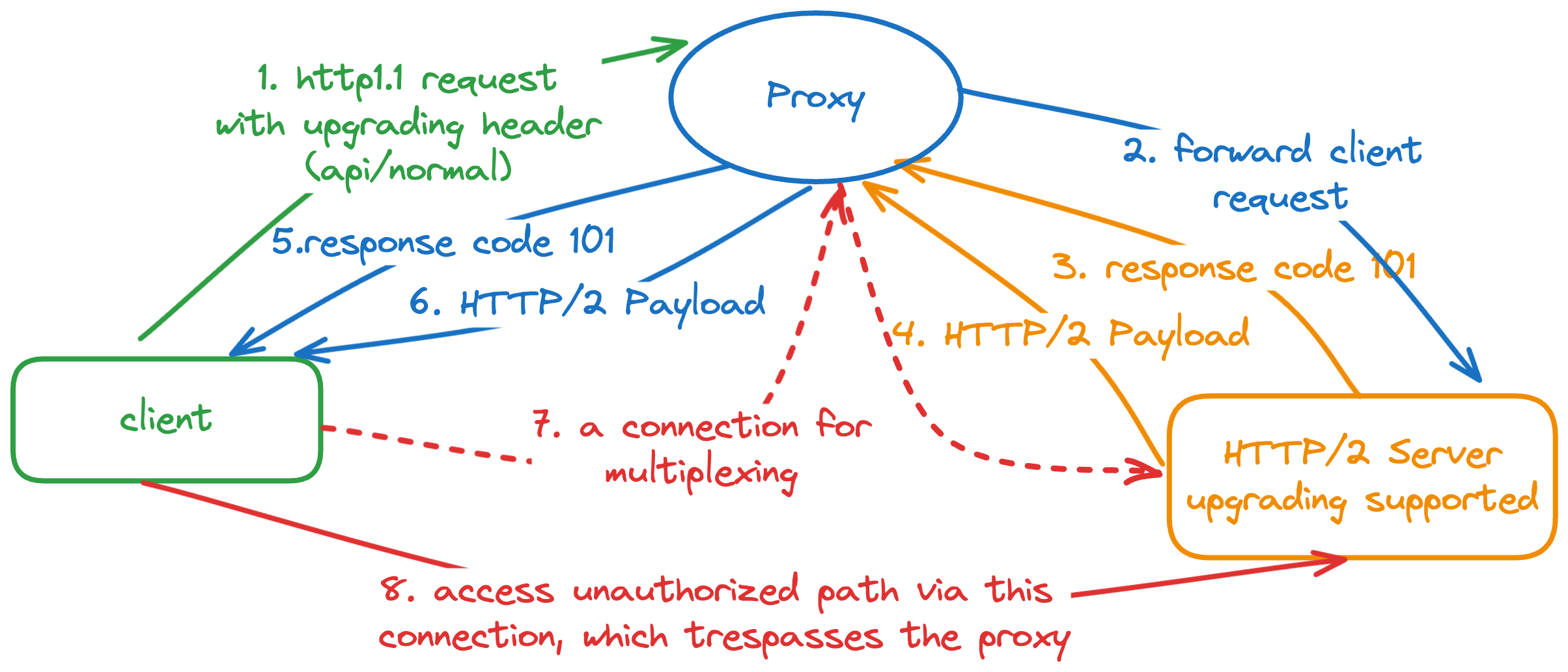

HTTP/2 smuggling happens when a proxy is involved, and it allows an attacker to reach unauthorized resources via the HTTP/2 upgrade.

Let's say api/normal can be accessed by any user but api/flag is limited to authorized users only. Access control is enforced by a proxy such as nginx. Note that the proxy must support HTTP/2 as well. The steps of HTTP/2 smuggling are as follows:

- the client sends a request with the upgrade header to the proxy

- the proxy checks access and forwards the request blindly to the server

- the server sees an HTTP/1.1 request with an upgrade header, so it sends a 101 response code followed by HTTP/2 payload

- the proxy forwards the response and the payload

- a long-lived connection is established

- the client requests an unauthorized resource such as api/flag directly, which bypasses the proxy's restriction

HTTP/2 smuggling has affected several well-known cloud providers such as Azure and Amazon. The final solution is to stop forwarding the upgrade header at the proxy.
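The mitigation can be sketched as a small middleware placed in front of whatever handler forwards requests to the backend. This is a minimal illustration, not a hardened implementation: `stripH2CUpgrade`, `offersH2C`, and `upgradeSeenByBackend` are hypothetical names, and the HTTP2-Settings value is a placeholder.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// offersH2C reports whether any Upgrade header value lists the h2c token.
func offersH2C(h http.Header) bool {
	for _, v := range h.Values("Upgrade") {
		for _, tok := range strings.Split(v, ",") {
			if strings.EqualFold(strings.TrimSpace(tok), "h2c") {
				return true
			}
		}
	}
	return false
}

// stripH2CUpgrade removes the h2c upgrade headers before handing the
// request to next, so the backend only ever sees a plain HTTP/1.1 request.
func stripH2CUpgrade(next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if offersH2C(r.Header) {
			r = r.Clone(r.Context()) // avoid mutating the caller's request
			r.Header.Del("Upgrade")
			r.Header.Del("HTTP2-Settings")
			r.Header.Del("Connection")
		}
		next.ServeHTTP(w, r)
	})
}

// upgradeSeenByBackend runs one request through the middleware and returns
// the Upgrade header value that reached the backend handler.
func upgradeSeenByBackend(upgrade string) string {
	var seen string
	backend := http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		seen = r.Header.Get("Upgrade")
	})
	req := httptest.NewRequest(http.MethodGet, "/api/normal", nil)
	req.Header.Set("Upgrade", upgrade)
	req.Header.Set("Connection", "Upgrade")
	req.Header.Set("HTTP2-Settings", "placeholder-settings")
	stripH2CUpgrade(backend).ServeHTTP(httptest.NewRecorder(), req)
	return seen
}

func main() {
	fmt.Printf("h2c -> %q\n", upgradeSeenByBackend("h2c"))
	fmt.Printf("websocket -> %q\n", upgradeSeenByBackend("websocket"))
}
```

Only requests that offer h2c are touched, so other upgrades such as WebSocket keep working in this sketch.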

Implementation Issue¶

TODO: should be fixed after checking the implementation of go h2c.

Go H2C Package Specific Notes¶

ResponseWriter Implementation¶

Although the http.ResponseWriter interface declares only three methods, the underlying implementation satisfies many more interfaces.

    type ResponseWriter interface {
        Header() Header
        Write([]byte) (int, error)
        WriteHeader(statusCode int)
    }

For example, the standard http.Server implements ResponseWriter with a struct named response, which supports the following interfaces in addition to http.ResponseWriter:

- io.ReaderFrom

- http.Flusher

- http.Hijacker

- Deprecated: http.CloseNotifier

However, once you support h2c, the response writer no longer satisfies all of the interfaces listed above. H2C defines its own responseWriter, which supports these interfaces:

- stringWriter, with the method WriteString(s string) (n int, err error)

- http.Flusher

- http.Pusher

- Deprecated: http.CloseNotifier

The supported interfaces overlap but are not identical. Hence, if you want to upgrade HTTP/1.1 to HTTP/2 transparently in your framework, pay attention to the methods of your response writer implementation. As a library maintainer, don't expose an additional interface unless you want users to rely on it; otherwise it will block you from supporting HTTP/2 silently.

For example, a company-wide HTTP server library shouldn't expose Hijacker on its response writer, because we don't recommend that users hijack the connection for another protocol such as WebSocket. By the way, the basic (and probably only) use of Hijacker is WebSocket, although custom protocols on top of TCP use it as well.
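Handlers that rely on these optional interfaces should probe for them with a type assertion rather than assume they exist. The sketch below shows the pattern, using httptest.ResponseRecorder as a stand-in writer that, like an HTTP/2 writer, does not implement http.Hijacker. The `capabilities` type and the helper names are illustrative.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// capabilities records which optional interfaces a ResponseWriter offers.
type capabilities struct {
	Flusher, Hijacker, Pusher bool
}

// probe checks the optional interfaces with type assertions. A handler can
// use the same pattern to degrade gracefully instead of panicking.
func probe(w http.ResponseWriter) capabilities {
	_, f := w.(http.Flusher)
	_, h := w.(http.Hijacker)
	_, p := w.(http.Pusher)
	return capabilities{Flusher: f, Hijacker: h, Pusher: p}
}

// probeRecorder runs probe against httptest's in-memory writer.
func probeRecorder() capabilities {
	return probe(httptest.NewRecorder())
}

func main() {
	fmt.Printf("%+v\n", probeRecorder())
}
```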

Use MaxBytesHandler to Limit Memory Consumption¶

To avoid excessive memory consumption, it is a good idea to use MaxBytesHandler (supported since go1.18). The need comes from the upgrading mechanism mentioned above. As the documentation of h2c.NewHandler notes:

The first request on an h2c connection is read entirely into memory before the Handler is called. To limit the memory consumed by this request, wrap the result of NewHandler in an http.MaxBytesHandler.
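The effect of the wrapper can be seen with the standard library alone. In the sketch below, `bodyLen` is a hypothetical stand-in for the handler you would normally get from h2c.NewHandler, and the sizes are arbitrary. The wrapping call, http.MaxBytesHandler, is the same either way.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strings"
)

// bodyLen drains the request body and reports its size. Reading past the
// limit set by MaxBytesHandler makes the read fail, which is mapped to 413.
func bodyLen(w http.ResponseWriter, r *http.Request) {
	n, err := io.Copy(io.Discard, r.Body)
	if err != nil {
		http.Error(w, "request body too large", http.StatusRequestEntityTooLarge)
		return
	}
	fmt.Fprintf(w, "%d", n)
}

// statusFor sends a body of bodySize bytes through a handler limited to
// limit bytes and returns the resulting status code.
func statusFor(bodySize int, limit int64) int {
	h := http.MaxBytesHandler(http.HandlerFunc(bodyLen), limit)
	body := strings.NewReader(strings.Repeat("x", bodySize))
	req := httptest.NewRequest(http.MethodPost, "/", body)
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	return rec.Code
}

func main() {
	fmt.Println(statusFor(10, 100))  // body within the limit
	fmt.Println(statusFor(200, 100)) // body over the limit
}
```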

Use Default or Max Value for MaxReadFrameSize¶

A small frame size makes HTTP/2 much slower when payloads are large. Raising the read frame size is a workaround suggested by robaho, who provided a fix that speeds things up by increasing MaxReadFrameSize:

Http2 is a multiplexed protocol with independent streams. The Go implementation uses a common reader thread/routine to read all of the connection content, and then demuxes the streams and passes the data via pipes to the stream readers. This multithreaded nature requires the use of locks to coordinate. By managing the window size, the connection reader should never block writing to a stream buffer - but a stream reader may stall waiting for data to arrive - get descheduled - only to be quickly rescheduled when reader places more data in the buffer - which is inefficient

Here I benchmarked the minimum size 1<<14 (16KB), the default size 1<<20 (1MB), and the maximum size 1<<24-1 (16MB) on both the client side and the server side, using my fork. In short, the minimum size reduces throughput a lot, while there is no big difference between the default and the maximum frame size.
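A back-of-the-envelope calculation gives a feel for the three sizes: the number of DATA frames needed to carry the 10 GB benchmark body, assuming every frame is filled completely (real traffic will not be that tidy). The constant and function names are illustrative.

```go
package main

import "fmt"

const (
	minFrameSize = 1 << 14   // 16 KiB, the smallest size the protocol allows
	defFrameSize = 1 << 20   // 1 MiB, the default size mentioned above
	maxFrameSize = 1<<24 - 1 // just under 16 MiB, the largest size allowed
)

// framesNeeded returns how many full-size frames are required to carry
// bodyBytes, rounding up for the final partial frame.
func framesNeeded(bodyBytes, frameSize int64) int64 {
	return (bodyBytes + frameSize - 1) / frameSize
}

func main() {
	const body = 10_000_000_000 // the 10 GB body used in the benchmark
	for _, size := range []int64{minFrameSize, defFrameSize, maxFrameSize} {
		fmt.Printf("frame size %8d -> %6d frames\n", size, framesNeeded(body, size))
	}
}
```

Under these assumptions the body needs 610352 frames at the minimum size, 9537 at the default, and 597 at the maximum. The jump from minimum to default removes most of the per-frame handoffs, which fits the observation that going from default to maximum changes little.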

Client uses the default frame size.

    receiving data with HTTP/1.1
    server sent 10000000000 bytes in 1.585451333s = 50.5 Gbps (262144 chunks)
    client received 10000000000 bytes in 1.586012917s = 50.4 Gbps, 61089 write ops, 4194304 buff
    receiving data with HTTP/2.0
    server sent 10000000000 bytes in 7.814963583s = 10.2 Gbps (262144 chunks)
    client received 10000000000 bytes in 7.814950583s = 10.2 Gbps, 350461 write ops, 1097728 buff

Client uses the default frame size.

    receiving data with HTTP/1.1
    server sent 10000000000 bytes in 1.818575375s = 44.0 Gbps (262144 chunks)
    client received 10000000000 bytes in 1.818641125s = 44.0 Gbps, 51443 write ops, 4194304 buff
    receiving data with HTTP/2.0
    server sent 10000000000 bytes in 2.281615958s = 35.1 Gbps (262144 chunks)
    client received 10000000000 bytes in 2.281870584s = 35.1 Gbps, 33828 write ops, 3670016 buff

Client uses the default frame size.

    receiving data with HTTP/1.1
    server sent 10000000000 bytes in 1.812906041s = 44.1 Gbps (262144 chunks)
    client received 10000000000 bytes in 1.813315209s = 44.1 Gbps, 45279 write ops, 4194304 buff
    receiving data with HTTP/2.0
    server sent 10000000000 bytes in 2.246520667s = 35.6 Gbps (262144 chunks)
    client received 10000000000 bytes in 2.246826917s = 35.6 Gbps, 34718 write ops, 3407872 buff

Server uses the default frame size.

    receiving data with HTTP/1.1
    server sent 10000000000 bytes in 1.609520875s = 49.7 Gbps (262144 chunks)
    client received 10000000000 bytes in 1.609604959s = 49.7 Gbps, 60632 write ops, 4194304 buff
    receiving data with HTTP/2.0
    server sent 10000000000 bytes in 7.280920417s = 11.0 Gbps (262144 chunks)
    client received 10000000000 bytes in 7.280909459s = 11.0 Gbps, 379399 write ops, 770048 buff

    receiving data with HTTP/1.1
    server sent 10000000000 bytes in 1.912083542s = 41.8 Gbps (262144 chunks)
    client received 10000000000 bytes in 1.912116084s = 41.8 Gbps, 47703 write ops, 4194304 buff
    receiving data with HTTP/2.0
    server sent 10000000000 bytes in 2.530800125s = 31.6 Gbps (262144 chunks)
    client received 10000000000 bytes in 2.531025542s = 31.6 Gbps, 31597 write ops, 3932160 buff

H2C isn't always Faster¶

HTTP/2 doesn't perform well when sending only a few requests with large bodies, as golang/go#47840 shows.

In short, the issue reports that HTTP/1.1 is much faster for a single request with a 10GB body. HTTP/2 is slower in that case because of both the implementation and the protocol itself.

However, a single request is not the common case; real-world traffic consists of many requests. Under multi-request scenarios, HTTP/2 performs better than HTTP/1.1. Hence the issue mentioned above won't be a problem when you add HTTP/2 support to your system.

The throughput benchmark results for multiple requests are in my fork repo; see its README to learn more.

Send H2C Request by Client¶

Even though the default HTTP client negotiates the HTTP protocol with the server for you, you may want to send HTTP/2 requests directly, without HTTP/1.1 compatibility. To do so, set up the HTTP client as shown below. The two fields work together: AllowHTTP permits HTTP/2 requests over the plain http scheme, so no protocol negotiation via TLS takes place, while DialTLSContext lets you establish a plaintext connection instead of an encrypted TLS connection.

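    import (
        "context"
        "crypto/tls"
        "net"
        "net/http"

        "golang.org/x/net/http2"
    )

    // doH2C sends req, an already initialized request, over h2c with prior knowledge.
    func doH2C(req *http.Request) (*http.Response, error) {
        h2cCli := &http.Client{
            Transport: &http2.Transport{
                // allow the plain http scheme, because the server is an h2c server
                AllowHTTP: true,
                // establish a plaintext connection instead of a TLS connection
                DialTLSContext: func(ctx context.Context, network, addr string, _ *tls.Config) (net.Conn, error) {
                    return (&net.Dialer{}).DialContext(ctx, network, addr)
                },
            },
        }
        return h2cCli.Do(req)
    }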

Conclusion¶

Even though this post covers quite a lot about the h2c migration, in the end there are not many problems you need to worry about. Here is a short checklist in case you would like to support h2c in your backend service as well.

- Use h2c together with MaxBytesHandler from the http package (available since go1.18)

- Pay attention to the additional interfaces satisfied by the ResponseWriter implementation

- Check whether your gateway can prevent HTTP/2 smuggling if you use the h2c package, which allows the upgrading mechanism

- Check whether infrequent large requests are your typical workload. If so, HTTP/2 is not a good choice for you.